Many current machine learning

algorithms lack provable guarantees on one or more

of the following metrics: solution

quality, sample complexity, running

time. The

goal of our group is to provide algorithms with such

performance guarantees. This can involve designing

fundamentally new algorithms, or proving such

guarantees for existing algorithms.

A lot of our work has been

devoted to obtaining better mathematical

understanding of deep learning. We seek to open this

"black box" with respect to optimization,

generalization, and interpretability.

We are especially interested in unsupervised

learning, where the algorithm is given data that is

unlabeled/unannotated, and the goal is to learn

structure in the data. Our group haas done

work on language models (including topic models,

text embeddings and language models), matrix and

tensor factorization, sparse coding, Generative

Adversarial Nets (GANs).

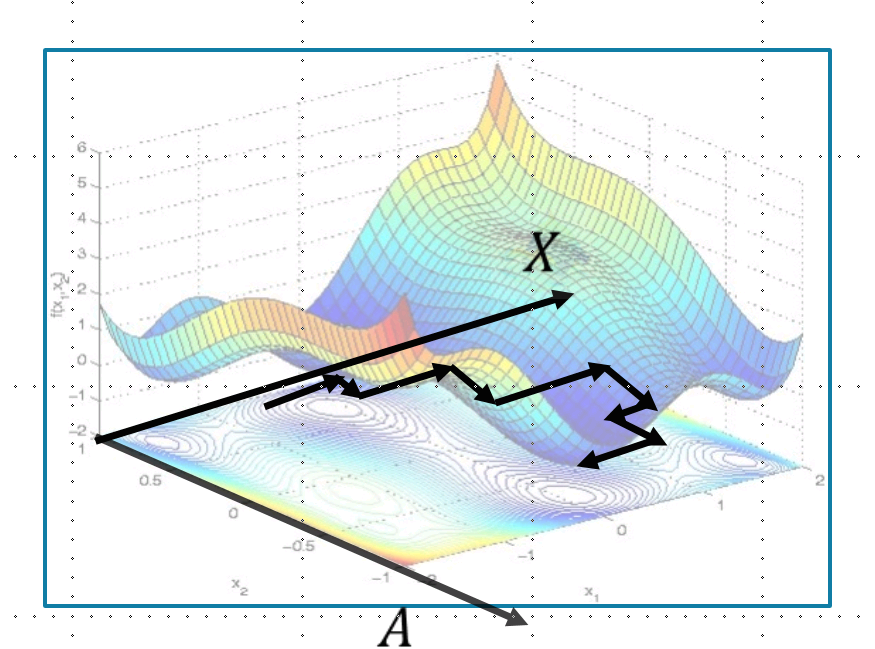

From the viewpoint of theoretical CS and traditional algorithm design, the novelty in this project is that one obtains new performance on problems whose worst-case instances is intractable. So one has to go beyond worst-case analysis.